Is the United States truly ready for the seismic shift in modern warfare, a transformation that The New Yorker’s veteran war correspondent describes not as evolution but as rupture? In “Is the U.S. Ready for the Next War?” (July 14, 2025), Dexter Filkins captures this tectonic realignment through a mosaic of battlefield reportage, strategic insight, and ethical reflection. His central thesis is both urgent and unsettling: that America, long mythologized for its martial supremacy, is culturally and institutionally unprepared for the emerging realities of war. The enemy is no longer just a rival state but also time itself: conflict is being rewritten in code, and the old machines can no longer keep pace.

The piece opens with a gripping image: a Ukrainian drone factory producing a thousand airborne machines daily, each costing just $500. Improvised, nimble, and devastating, these drones have inflicted disproportionate damage on Russian forces. Their success signals a paradigm shift—conflict has moved from regiments to swarms, from steel to software. Yet the deeper concern is not merely technological; it is cultural. The article is less a call to arms than a call to reimagine. Victory in future wars, it suggests, will depend not on weaponry alone, but on judgment, agility, and a conscience fit for the digital age.

Speed and Fragmentation: The Collision of Cultures

At the heart of the analysis lies a confrontation between two worldviews. On one side stands Silicon Valley—fast, improvisational, and software-driven. On the other: the Pentagon—layered, cautious, and locked in Cold War-era processes. One of the central figures is Palmer Luckey, the founder of the defense tech company Anduril, depicted as a symbol of insurgent innovation. Once a video game prodigy, he now leads teams designing autonomous weapons that can be manufactured as quickly as IKEA furniture and deployed without extensive oversight. His world thrives on rapid iteration, where warfare is treated like code—modular, scalable, and adaptive.

This approach clashes with the military’s entrenched bureaucracy. Procurement cycles stretch for years. Communication between service branches remains fractured. Even American ships and planes often operate on incompatible systems. A war simulation over Taiwan underscores this dysfunction: satellites failed to coordinate with aircraft, naval assets couldn’t link with space-based systems, and U.S. forces were paralyzed by their own institutional fragmentation. The problem wasn’t technology—it was organization.

What emerges is a portrait of a defense apparatus unable to act as a coherent whole. The fragmentation stems from a structure built for another era—one that now privileges process over flexibility. In contrast, adversaries operate with fluidity, leveraging technological agility as a force multiplier. Slowness, once a symptom of deliberation, has become a strategic liability.

The tension explored here is more than operational; it is civilizational. Can a democratic state tolerate the speed and autonomy now required in combat? Can institutions built for deliberation respond in milliseconds? These are not just questions of infrastructure, but of governance and identity. In the coming conflicts, latency may be lethal, and fragmentation fatal.

Imagination Under Pressure: Lessons from History

To frame the stakes, the essay draws on powerful historical precedents. Technological transformation has always arisen from moments of existential pressure: Prussia’s use of railways to reimagine logistics, the Gulf War’s precision missiles, and, most profoundly, the Manhattan Project. These were not the products of administrative order but of chaotic urgency, unleashed imagination, and institutional risk-taking.

During the Manhattan Project, multiple experimental paths were pursued simultaneously, protocols were bent, and innovation surged from competition. Today, however, America’s defense culture has shifted toward procedural conservatism. Risk is minimized; innovation is formalized. Bureaucracy may protect against error, but it also stifles the volatility that made American defense dynamic in the past.

This critique extends beyond the military. A broader cultural stagnation is implied: a nation that fears disruption more than defeat. If imagination is outsourced to private startups—entities beyond the reach of democratic accountability—strategic coherence may erode. Tactical agility cannot compensate for an atrophied civic center. The essay doesn’t argue for scrapping government institutions, but for reigniting their creative core. Defense must not only be efficient; it must be intellectually alive.

Machines, Morality, and the Shrinking Space for Judgment

Perhaps the most haunting dimension of the essay lies in its treatment of ethics. As autonomous systems proliferate—from loitering drones to AI-driven targeting software—the space for human judgment begins to vanish. Some militaries, like Israel’s, still preserve a “human-in-the-loop” model where a person retains final authority. But this safeguard is fragile. The march toward autonomy is relentless.

The implications are grave. When decisions to kill are handed to algorithms trained on probability and sensor data, who bears responsibility? Engineers? Programmers? Military officers? The author references DeepMind’s Demis Hassabis, who warns of the ease with which powerful systems can be repurposed for malign ends. Yet the more chilling possibility is not malevolence, but moral atrophy: a world where judgment is no longer expected or practiced.

Combat, if rendered frictionless and remote, may also become civically invisible. Democratic oversight depends on consequence—and when warfare is managed through silent systems and distant screens, that consequence becomes harder to feel. A nation that no longer confronts the human cost of its defense decisions risks sliding into apathy. Autonomy may bring tactical superiority, but also ethical drift.

Throughout, the article avoids hysteria, opting instead for measured reflection. Its central moral question is timeless: Can conscience survive velocity? In wars of machines, will there still be room for the deliberation that defines democratic life?

The Republic in the Mirror: A Final Reflection

The closing argument is not tactical, but philosophical. Readiness, the essay insists, must be measured not just by stockpiles or software, but by the moral posture of a society—its ability to govern the tools it creates. Military power divorced from democratic deliberation is not strength, but fragility. Supremacy must be earned anew, through foresight, imagination, and accountability.

The challenge ahead is not just to match adversaries in drones or data, but to uphold the principles that give those tools meaning. Institutions must be built to respond, but also to reflect. Weapons must be precise—but judgment must be present. The republic’s defense must operate at the speed of code while staying rooted in the values of a self-governing people.

The author leaves us with a final provocation: The future will not wait for consensus—but neither can it be left to systems that have forgotten how to ask questions. In this, his work becomes less a study in strategy than a meditation on civic responsibility. The real arsenal is not material—it is ethical. And readiness begins not in the factories of drones, but in the minds that decide when and why to use them.

THIS ESSAY REVIEW WAS WRITTEN BY AI AND EDITED BY INTELLICUREAN.

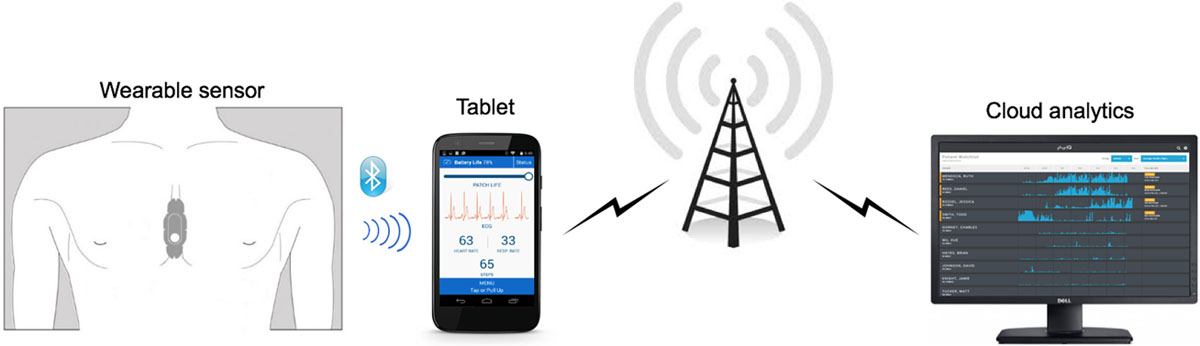
