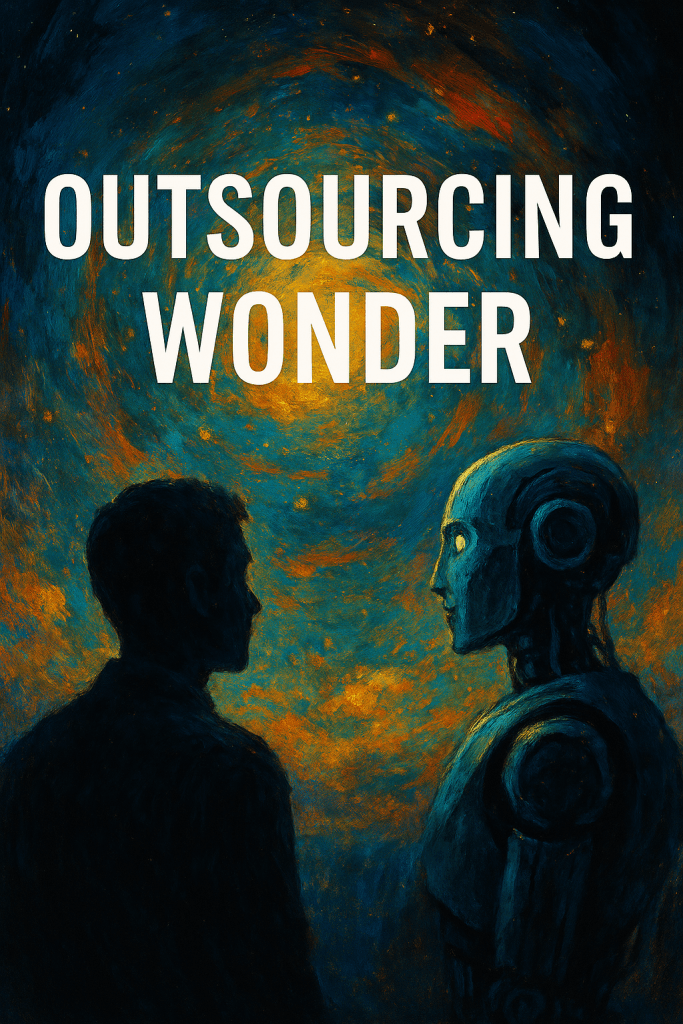

A high school student opens her laptop and types a question: “What is Hamlet really about?” Within seconds, a sleek block of text appears: elegant, articulate, and seemingly insightful. She pastes it into her assignment, hits submit, and moves on. But something vital is lost: not just effort or time, but a deeper encounter with ambiguity, complexity, and meaning. What if the greatest threat to our intellect isn’t ignorance but the ease of instant answers?

In a world increasingly saturated with generative AI (GenAI), our relationship to knowledge is undergoing a tectonic shift. These systems can summarize texts, mimic reasoning, and simulate creativity with uncanny fluency. But what happens to intellectual inquiry when answers arrive too easily? Are we growing more informed—or less thoughtful?

To navigate this evolving landscape, we turn to two illuminating frameworks: Daniel Kahneman’s Thinking, Fast and Slow and Chrysi Rapanta et al.’s essay Critical GenAI Literacy: Postdigital Configurations. Kahneman maps out how our brains process thought; Rapanta reframes how AI reshapes the very context in which that thinking unfolds. Together, they urge us not to reject the machine, but to think against it—deliberately, ethically, and curiously.

System 1 Meets the Algorithm

Kahneman’s landmark theory proposes that human thought operates through two systems. System 1 is fast, automatic, and emotional. It leaps to conclusions, draws on experience, and navigates the world with minimal friction. System 2 is slow, deliberate, and analytical. It demands effort—and pays in insight.

GenAI is tailor-made to flatter System 1. Ask it to analyze a poem, explain a philosophical idea, or write a business proposal, and it complies—instantly, smoothly, and often convincingly. This fluency is seductive. But beneath its polish lies a deeper concern: the atrophy of critical thinking. By bypassing the cognitive friction that activates System 2, GenAI risks reducing inquiry to passive consumption.

As Nicholas Carr warned in The Shallows, the internet already primes us for speed, scanning, and surface engagement. GenAI, he might say today, elevates that tendency to an art form. When the answer is coherent and immediate, why wrestle to understand? Yet intellectual effort isn’t wasted motion—it’s precisely where meaning is made.

The Postdigital Condition: Literacy Beyond Technical Skill

Rapanta and her co-authors offer a vital reframing: GenAI is not merely a tool but a cultural actor. It shapes epistemologies, values, and intellectual habits. Hence, the need for critical GenAI literacy—the ability not only to use GenAI but to interrogate its assumptions, biases, and effects.

Algorithms are not neutral. As Safiya Umoja Noble demonstrated in Algorithms of Oppression, search engines and AI models reflect the data they’re trained on—data steeped in historical inequality and structural bias. GenAI inherits these distortions, even while presenting answers with a sheen of objectivity.

Rapanta’s framework insists that genuine literacy means questioning more than content. What is the provenance of this output? What cultural filters shaped its formation? Whose voices are amplified—and whose are missing? Only through such questions do we begin to reclaim intellectual agency in an algorithmically curated world.

Curiosity as Critical Resistance

Kahneman reveals how prone we are to cognitive biases such as anchoring, availability, and overconfidence, all of which lead System 1 astray. GenAI, far from correcting these habits, may reinforce them. Its outputs reflect dominant ideologies, rarely revealing assumptions or acknowledging blind spots.

Rapanta et al. propose a solution grounded in epistemic courage. Critical GenAI literacy is less a checklist than a posture: of reflective questioning, skepticism, and moral awareness. It invites us to slow down and dwell in complexity—not just asking “What does this mean?” but “Who decides what this means—and why?”

Douglas Rushkoff’s Program or Be Programmed calls for digital literacy that cultivates agency. In this light, curiosity becomes cultural resistance—a refusal to surrender interpretive power to the machine. It’s not just about knowing how to use GenAI; it’s about knowing how to think around it.

Literary Reading, Algorithmic Interpretation

Interpretation is inherently plural—shaped by lens, context, and resonance. Kahneman would argue that System 1 offers the quick reading: plot, tone, emotional impact. System 2—skeptical, slow—reveals irony, contradiction, and ambiguity.

GenAI can simulate literary analysis with finesse. Ask it to unpack Hamlet or Beloved, and it may return a plausible, polished interpretation. But it risks smoothing over the tensions that give literature its power. It defaults to mainstream readings, often omitting feminist, postcolonial, or psychoanalytic complexities.

Rapanta’s proposed pedagogy is dialogic. Let students compare their interpretations with GenAI’s: where do they diverge? What does the machine miss? How might different readers dissent? This meta-curiosity fosters humility and depth—not just with the text, but with the interpretive act itself.

Education in the Postdigital Age

This reimagining has profound implications for education. Critical literacy in the GenAI era must address:

- How algorithms generate and filter knowledge

- What ethical assumptions underlie AI systems

- Whose voices are missing from training data

- How human judgment can resist automation

Educators become co-inquirers, modeling skepticism, creativity, and ethical interrogation. Classrooms become sites of dialogic resistance—not rejecting AI, but humanizing its use by re-centering inquiry.

A study from Microsoft and Carnegie Mellon highlights a concern: when users over-trust GenAI, they exert less cognitive effort. Engagement drops. Retention suffers. Trust, in excess, dulls curiosity.

Reclaiming the Joy of Wonder

Emerging neurocognitive research suggests that overreliance on GenAI may dampen activation in brain regions associated with semantic depth. A speculative analysis from MIT Media Lab suggests that effortless outputs may reduce the intellectual stretch required to create meaning.

But friction isn’t failure—it’s where real insight begins. Miles Berry, in his work on computing education, reminds us that learning lives in the struggle, not the shortcut. GenAI may offer convenience, but it bypasses the missteps and epiphanies that nurture understanding.

Creativity, Berry insists, is not merely pattern assembly. It’s experimentation under uncertainty—refined through doubt and dialogue. Kahneman would agree: System 2 thinking, while difficult, is where human cognition finds its richest rewards.

Curiosity Beyond the Classroom

The implications reach beyond academia. Curiosity fuels critical citizenship, ethical awareness, and democratic resilience. GenAI may simulate insight—but wonder must remain human.

Ezra Lockhart, writing in the Journal of Cultural Cognitive Science, contends that true creativity depends on emotional resonance, relational depth, and moral imagination—qualities AI cannot emulate. Drawing on Rollo May and Judith Butler, Lockhart reframes creativity as a courageous way of engaging with the world.

In this light, curiosity becomes virtue. It refuses certainty, embraces ambiguity, and chooses wonder over efficiency. It is this moral posture—joyfully rebellious and endlessly inquisitive—that GenAI cannot provide, but may help provoke.

Toward a New Intellectual Culture

A flourishing postdigital intellectual culture would:

- Treat GenAI as collaborator, not surrogate

- Emphasize dialogue and iteration over absorption

- Integrate ethical, technical, and interpretive literacy

- Celebrate ambiguity, dissent, and slow thought

In this culture, Kahneman’s System 2 becomes more than cognition—it becomes character. Rapanta’s framework becomes intellectual activism. Curiosity—tenacious, humble, radiant—becomes our compass.

Conclusion: Thinking Beyond the Machine

The future of thought will not be defined by how well machines simulate reasoning, but by how deeply we choose to think with them—and, often, against them. Daniel Kahneman reminds us that genuine insight comes not from ease, but from effort—from the deliberate activation of System 2 when System 1 seeks comfort. Rapanta and colleagues push further, revealing GenAI as a cultural force worthy of interrogation.

GenAI offers astonishing capabilities: broader access to knowledge, imaginative collaboration, and new modes of creativity. But it also risks narrowing inquiry, dulling ambiguity, and replacing questions with answers. To embrace its potential without surrendering our agency, we must cultivate a new ethic—one that defends friction, reveres nuance, and protects the joy of wonder.

Thinking against the machine isn’t antagonism—it’s responsibility. It means reclaiming meaning from convenience, depth from fluency, and curiosity from automation. Machines may generate answers. But only we can decide which questions are still worth asking.

THIS ESSAY WAS WRITTEN BY AI AND EDITED BY INTELLICUREAN